The first question that comes to mind while drawing a Decision Tree is this: given a set of data, how many attributes are there, and what is the target class (binary or multi-valued classification)? In the Weather dataset, we have four attributes (outlook, temperature, humidity, wind), and from these four attributes we have to select the root node. Once we choose one particular feature as the root node, which attribute should we choose as the root at the next level, and so on? That is the first question we need to answer. To answer it, we need to calculate the Information Gain of every attribute. Once we have calculated the Information Gain of every attribute, we can decide which attribute has maximum importance and select that attribute as the Root Node.
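The root-selection step above can be sketched in a few lines of Python. This is a minimal illustration, not the article's own code: it assumes the Weather dataset is the classic 14-row PlayTennis table (the article's table may differ), and the names `ROWS`, `ATTRIBUTES`, `entropy`, and `information_gain` are hypothetical helpers introduced here.

```python
from collections import Counter
from math import log2

# Assumption: the classic 14-row PlayTennis table; the article's own table may differ.
ATTRIBUTES = ["outlook", "temperature", "humidity", "wind"]
ROWS = [
    # (outlook, temperature, humidity, wind, play)
    ("sunny", "hot", "high", "weak", "no"),
    ("sunny", "hot", "high", "strong", "no"),
    ("overcast", "hot", "high", "weak", "yes"),
    ("rain", "mild", "high", "weak", "yes"),
    ("rain", "cool", "normal", "weak", "yes"),
    ("rain", "cool", "normal", "strong", "no"),
    ("overcast", "cool", "normal", "strong", "yes"),
    ("sunny", "mild", "high", "weak", "no"),
    ("sunny", "cool", "normal", "weak", "yes"),
    ("rain", "mild", "normal", "weak", "yes"),
    ("sunny", "mild", "normal", "strong", "yes"),
    ("overcast", "mild", "high", "strong", "yes"),
    ("overcast", "hot", "normal", "weak", "yes"),
    ("rain", "mild", "high", "strong", "no"),
]


def entropy(labels):
    """Shannon entropy in bits: -sum(p * log2(p)) over the class proportions."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attr_index):
    """Entropy of the target minus the weighted entropy of each subset after the split."""
    labels = [row[-1] for row in rows]
    subsets = {}
    for row in rows:
        # Group the target labels by this attribute's value.
        subsets.setdefault(row[attr_index], []).append(row[-1])
    remainder = sum(len(s) / len(rows) * entropy(s) for s in subsets.values())
    return entropy(labels) - remainder


gains = {name: information_gain(ROWS, i) for i, name in enumerate(ATTRIBUTES)}
root = max(gains, key=gains.get)  # attribute with maximum Information Gain

for name, gain in sorted(gains.items(), key=lambda kv: -kv[1]):
    print(f"{name:12s} {gain:.3f}")
print("root node:", root)
```

On this version of the table the target entropy is about 0.940 bits, and outlook has the highest gain (about 0.247), ahead of humidity (about 0.152), wind (about 0.048), and temperature (about 0.029), so outlook becomes the root. The same function can then be applied to the rows under each branch to choose the next-level roots.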

# Decision Tree Entropy: How To

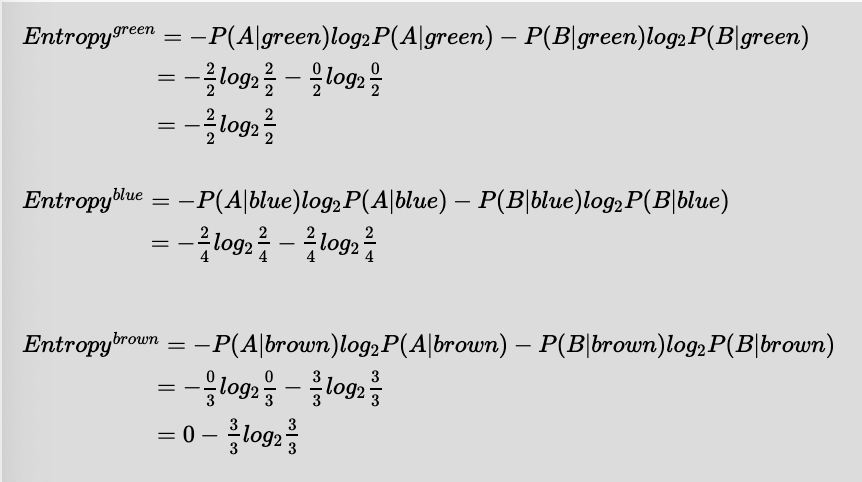

Decision Trees are machine learning methods for constructing prediction models from data. The prediction models are constructed by recursively partitioning a data set and fitting a simple model to each partition. As a result, the partitioning can be represented graphically as a decision tree.

- **Root Node**: The first node in the decision tree.
- **Nodes**: Test the value of a certain attribute and split into further sub-nodes.
- **Edges/Branches**: Correspond to the outcome of a test and connect to the next node or leaf.
- **Leaf Nodes**: Terminal nodes that predict the outcome.

Depending on the target variable, there are two kinds of decision tree:

- **Classification Tree**: The target variable is categorical.
- **Regression Tree**: The target variable is continuous.

To understand the concept of a Decision Tree, consider the example below. Let's say we want to play tennis on a particular day, say Monday. How do you know whether or not to play? You go out and check whether it's cold or hot, the wind speed and humidity, and what the weather is like (sunny, snowy, or rainy). You take all of these variables into consideration, and to determine whether to play or not, you use the table.

But what if Monday's weather pattern doesn't exactly match any of the rows in the table? That is a concern. A decision tree can be a perfect way to represent data like this: by following a tree-like structure, it considers all possible directions that can lead to the final decision.

This article will demonstrate how to find entropy and information gain while drawing the Decision Tree.
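To make the root node, nodes, edges, and leaf nodes concrete, here is a minimal Python sketch of a tree for the tennis example. The tree shape is an assumption: it is the tree usually learned from the classic 14-row PlayTennis table, not one derived in this article, and the `Node`, `Leaf`, and `predict` names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Union


@dataclass
class Leaf:
    """Leaf node: a terminal node holding the predicted outcome."""
    label: str


@dataclass
class Node:
    """Internal node: tests one attribute; each branch (edge) is one attribute value."""
    attribute: str
    branches: Dict[str, "Tree"] = field(default_factory=dict)


Tree = Union[Node, Leaf]


def predict(tree: Tree, example: Dict[str, str]) -> str:
    """Follow the edge matching the example's value at each node until a leaf is reached.

    Sketch only: an attribute value with no matching branch raises KeyError.
    """
    while isinstance(tree, Node):
        tree = tree.branches[example[tree.attribute]]
    return tree.label


# Assumed tree: the root node tests outlook; two branches lead to further tests.
play_tennis_tree = Node("outlook", {
    "sunny": Node("humidity", {"high": Leaf("no"), "normal": Leaf("yes")}),
    "overcast": Leaf("yes"),
    "rain": Node("wind", {"strong": Leaf("no"), "weak": Leaf("yes")}),
})

# Hypothetical Monday: this exact combination is not a row in the classic table.
monday = {"outlook": "sunny", "temperature": "cool", "humidity": "high", "wind": "strong"}
print(predict(play_tennis_tree, monday))  # no
```

Because the tree only asks about the attributes along one path from the root, it can still give an answer for a day whose full combination of values never appears in the table. In this particular tree, temperature is never tested at all.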
